You are reading help file online using chmlib.com

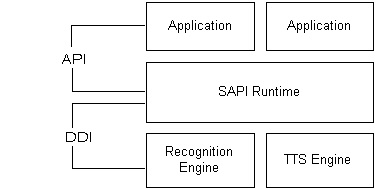

The SAPI application programming interface (API) dramatically reduces the code overhead required for an application to use speech recognition and text-to-speech, making speech technology more accessible and robust for a wide range of applications.

This section covers the following topics: an overview of the API, the API for text-to-speech, and the API for speech recognition.

The SAPI API provides a high-level interface between an application and speech engines. SAPI implements all the low-level details needed to control and manage the real-time operations of various speech engines.

The two basic types of SAPI engines are text-to-speech (TTS) systems and speech recognizers. TTS systems synthesize text strings and files into spoken audio using synthetic voices. Speech recognizers convert human spoken audio into readable text strings and files.

Applications can control text-to-speech (TTS) using the ISpVoice Component Object Model (COM) interface. Once an application has created an ISpVoice object (see Text-to-Speech Tutorial), it only needs to call ISpVoice::Speak to generate speech output from text data. The ISpVoice interface also provides methods for changing voice and synthesis properties, such as the speaking rate (ISpVoice::SetRate), the output volume (ISpVoice::SetVolume), and the current speaking voice (ISpVoice::SetVoice).
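As a minimal sketch of the calls named above, the following creates a voice, applies a rate and volume, and speaks synchronously. The helper names `clampRate`, `clampVolume`, and `speakText` are hypothetical and not part of SAPI; the sketch assumes the ranges documented for ISpVoice::SetRate (-10 to 10) and ISpVoice::SetVolume (0 to 100), and the SAPI portion compiles only on Windows.

```cpp
#ifdef _WIN32
#include <sapi.h>   // SAPI 5 COM interfaces; typically linked with ole32.lib and sapi.lib
#endif

// Hypothetical helpers: keep values inside the ranges assumed for
// ISpVoice::SetRate (-10..10) and ISpVoice::SetVolume (0..100).
inline long clampRate(long rate) {
    return rate < -10 ? -10 : (rate > 10 ? 10 : rate);
}
inline unsigned short clampVolume(long volume) {
    return static_cast<unsigned short>(volume < 0 ? 0 : (volume > 100 ? 100 : volume));
}

#ifdef _WIN32
// Speaks `text` synchronously on the default voice and audio output.
// Returns the first failing HRESULT, or the result of Speak.
HRESULT speakText(const wchar_t* text, long rate, long volume) {
    HRESULT hr = ::CoInitialize(nullptr);
    if (FAILED(hr)) return hr;

    ISpVoice* voice = nullptr;
    hr = ::CoCreateInstance(CLSID_SpVoice, nullptr, CLSCTX_ALL,
                            IID_ISpVoice, reinterpret_cast<void**>(&voice));
    if (SUCCEEDED(hr)) {
        voice->SetRate(clampRate(rate));
        voice->SetVolume(clampVolume(volume));
        hr = voice->Speak(text, SPF_DEFAULT, nullptr);  // blocks until speech ends
        voice->Release();
    }
    ::CoUninitialize();
    return hr;
}
#endif
```

A caller on Windows might write `speakText(L"Hello from SAPI", 0, 100);` and check the returned HRESULT with `SUCCEEDED`.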

Special SAPI controls can also be inserted along with the input text to change real-time synthesis properties such as voice, pitch, word emphasis, speaking rate, and volume. This synthesis markup (sapi.xsd), which uses standard XML format, is a simple but powerful way to customize the TTS speech, independent of the specific engine or voice currently in use.
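To illustrate what such markup can look like, the sketch below builds marked-up strings with the `<volume>`, `<rate>`, and `<emph>` elements from the SAPI 5 TTS schema. The function names are hypothetical, and escaping text with the standard XML entities is an assumption about what the SAPI parser accepts rather than something this page states.

```cpp
#include <string>

// Escape characters that would otherwise be parsed as markup.
// Assumption: the SAPI XML parser accepts the standard XML entities.
std::wstring escapeXml(const std::wstring& text) {
    std::wstring out;
    for (wchar_t c : text) {
        switch (c) {
            case L'&': out += L"&amp;"; break;
            case L'<': out += L"&lt;";  break;
            case L'>': out += L"&gt;";  break;
            default:   out += c;
        }
    }
    return out;
}

// Wrap text so it is spoken at an absolute rate and volume.
std::wstring withRateAndVolume(const std::wstring& text, int rate, int volume) {
    return L"<volume level=\"" + std::to_wstring(volume) + L"\">" +
           L"<rate absspeed=\"" + std::to_wstring(rate) + L"\">" +
           escapeXml(text) +
           L"</rate></volume>";
}

// Mark a word or phrase for emphasis.
std::wstring emphasize(const std::wstring& phrase) {
    return L"<emph>" + escapeXml(phrase) + L"</emph>";
}
```

The resulting string would be passed to ISpVoice::Speak, optionally with the SPF_IS_XML flag so that the text is always parsed as markup.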

The ISpVoice::Speak method can operate either synchronously (returning only when it has completely finished speaking) or asynchronously (returning immediately and speaking as a background process). When speaking asynchronously (SPF_ASYNC), real-time status information such as speaking state and current text location can be polled using ISpVoice::GetStatus. Also while speaking asynchronously, new text can be spoken either by immediately interrupting the current output (SPF_PURGEBEFORESPEAK) or by automatically appending the new text to the end of the current output.
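The choice between appending and interrupting comes down to which flags are passed to Speak. The sketch below shows one way to combine them and to poll the running state. `speakFlags`, `speakAsync`, and `isSpeaking` are hypothetical helper names, and the stand-in constants in the non-Windows branch are assumed to mirror the SPEAKFLAGS values in sapi.h; they exist only so the flag logic can be compiled elsewhere.

```cpp
#ifdef _WIN32
#include <sapi.h>
#else
// Stand-ins for compiling the flag logic off Windows. Assumed to mirror
// the SPEAKFLAGS enumeration in sapi.h; verify against your SDK headers.
enum { SPF_DEFAULT = 0, SPF_ASYNC = 1, SPF_PURGEBEFORESPEAK = 2 };
#endif

// Hypothetical helper: pick Speak flags for the requested behavior.
unsigned long speakFlags(bool async, bool interrupt) {
    unsigned long flags = SPF_DEFAULT;
    if (async)     flags |= SPF_ASYNC;             // return immediately
    if (interrupt) flags |= SPF_PURGEBEFORESPEAK;  // discard current and queued output
    return flags;
}

#ifdef _WIN32
// Queue `text` behind the current output, or replace that output
// when `interrupt` is true. Returns without waiting for speech to end.
HRESULT speakAsync(ISpVoice* voice, const wchar_t* text, bool interrupt) {
    return voice->Speak(text, speakFlags(true, interrupt), nullptr);
}

// Poll the voice: true while it is still rendering text.
bool isSpeaking(ISpVoice* voice) {
    SPVOICESTATUS status = {};
    HRESULT hr = voice->GetStatus(&status, nullptr);
    return SUCCEEDED(hr) && status.dwRunningState == SPRS_IS_SPEAKING;
}
#endif
```

Polling suits simple status displays; applications that need to react at precise moments would more likely use the event mechanisms instead.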

In addition to the ISpVoice interface, SAPI also provides many utility COM interfaces for the more advanced TTS applications.

SAPI communicates with applications by sending events using standard callback mechanisms (window message, callback procedure, or Win32 event). For TTS, events are mostly used for synchronizing to the output speech. Applications can synchronize to real-time actions as they occur, such as word boundaries, phoneme or viseme (mouth animation) boundaries, or application-defined bookmarks. Applications can initialize and handle these real-time events using ISpNotifySource, ISpNotifySink, ISpNotifyTranslator, ISpEventSink, ISpEventSource, and ISpNotifyCallback.
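As one possible way to synchronize with word boundaries, the sketch below uses the Win32 event mechanism: it requests word-boundary and end-of-stream events, speaks asynchronously, and reports each word as it is reached. `wordAt` and `speakWithWordEvents` are hypothetical names. The sketch assumes that, for SPEI_WORD_BOUNDARY, `lParam` carries the character offset of the word in the input text and `wParam` its length, and that the text is spoken as plain text so the offsets line up.

```cpp
#include <cstddef>
#include <string>

#ifdef _WIN32
#include <sapi.h>
#endif

// Return the word at [pos, pos + len) in `text`, clamped to the text bounds.
std::wstring wordAt(const std::wstring& text, std::size_t pos, std::size_t len) {
    if (pos >= text.size()) return L"";
    return text.substr(pos, len);  // substr clamps len to the end of the string
}

#ifdef _WIN32
// Speak `text` asynchronously and call onWord(word) at each word boundary.
template <typename OnWord>
HRESULT speakWithWordEvents(ISpVoice* voice, const std::wstring& text, OnWord onWord) {
    const ULONGLONG interest =
        SPFEI(SPEI_WORD_BOUNDARY) | SPFEI(SPEI_END_INPUT_STREAM);
    HRESULT hr = voice->SetInterest(interest, interest);
    if (SUCCEEDED(hr)) hr = voice->SetNotifyWin32Event();
    if (SUCCEEDED(hr)) hr = voice->Speak(text.c_str(), SPF_ASYNC | SPF_IS_NOT_XML, nullptr);

    bool done = FAILED(hr);
    while (!done) {
        voice->WaitForNotifyEvent(INFINITE);
        SPEVENT ev = {};
        ULONG fetched = 0;
        // Drain every event queued since the last notification.
        while (voice->GetEvents(1, &ev, &fetched) == S_OK && fetched == 1) {
            if (ev.eEventId == SPEI_WORD_BOUNDARY) {
                onWord(wordAt(text, static_cast<std::size_t>(ev.lParam),
                              static_cast<std::size_t>(ev.wParam)));
            } else if (ev.eEventId == SPEI_END_INPUT_STREAM) {
                done = true;
            }
        }
    }
    return hr;
}
#endif
```

A GUI application would more likely choose the window message mechanism so that events arrive on its message loop rather than blocking a thread.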

Applications can provide custom word pronunciations for speech synthesis engines using methods provided by ISpContainerLexicon, ISpLexicon and ISpPhoneConverter.

Finding and selecting SAPI speech data such as voice files and pronunciation lexicons can be handled by the following COM interfaces: ISpDataKey, ISpRegDataKey, ISpObjectTokenInit, ISpObjectTokenCategory, ISpObjectToken, IEnumSpObjectTokens, ISpObjectWithToken, ISpResourceManager and ISpTask.

Just as ISpVoice is the main interface for speech synthesis, ISpRecoContext is the main interface for speech recognition. Like ISpVoice, it is an ISpEventSource, which means that it is the vehicle through which the speech application receives notifications of the speech recognition events it has requested.

An application has the choice of two different types of speech recognition engines (ISpRecognizer). A shared recognizer, which can be shared with other speech recognition applications, is recommended for most speech applications. To create an ISpRecoContext for a shared ISpRecognizer, an application need only call COM's CoCreateInstance on the component CLSID_SpSharedRecoContext. In this case, SAPI will set up the audio input stream, setting it to SAPI's default audio input stream. For large server applications that would run alone on a system, and for which performance is key, an InProc speech recognition engine is more appropriate. To create an ISpRecoContext for an InProc ISpRecognizer, the application must first call CoCreateInstance on the component CLSID_SpInprocRecognizer to create its own InProc ISpRecognizer. Then the application must call ISpRecognizer::SetInput (see also ISpObjectToken) to set up the audio input. Finally, the application can call ISpRecognizer::CreateRecoContext to obtain an ISpRecoContext.

The next step is to set up notifications for the events the application is interested in. Because the ISpRecoContext is also an ISpEventSource, which in turn is an ISpNotifySource, the application can call one of the ISpNotifySource methods from its ISpRecoContext to indicate where the events for that ISpRecoContext should be reported. Then it should call ISpEventSource::SetInterest to indicate which events it needs to be notified of. The most important event is SPEI_RECOGNITION, which indicates that the ISpRecognizer has recognized some speech for this ISpRecoContext. See SPEVENTENUM for details on the other available speech recognition events.

Finally, a speech application must create, load, and activate an ISpRecoGrammar, which essentially indicates what type of utterances to recognize, i.e., dictation or a command and control grammar. First, the application creates an ISpRecoGrammar using ISpRecoContext::CreateGrammar. Then, the application loads the appropriate grammar, either by calling ISpRecoGrammar::LoadDictation for dictation or one of the ISpRecoGrammar::LoadCmdxxx methods for command and control. Finally, in order to activate these grammars so that recognition can start, the application calls ISpRecoGrammar::SetDictationState for dictation or ISpRecoGrammar::SetRuleState or ISpRecoGrammar::SetRuleIdState for command and control.

When recognitions come back to the application by means of the requested notification mechanism, the lParam member of the SPEVENT structure holds a pointer to an ISpRecoResult, from which the application can determine what was recognized and for which ISpRecoGrammar of the ISpRecoContext.
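The recognition steps described so far (create a context, request notifications, load and activate a grammar, read the result) can be sketched end to end for dictation on the shared recognizer. This is a minimal illustration rather than a definitive implementation: `recognizeOnePhrase` is a hypothetical name, the grammar id 1 is an arbitrary application-chosen value, the caller is assumed to have initialized COM already, and the code compiles only on Windows.

```cpp
#ifdef _WIN32
#include <sapi.h>
#include <string>

// Blocks until one dictation phrase is recognized and stores its text in `out`.
// Assumes the caller has already called CoInitialize on this thread.
HRESULT recognizeOnePhrase(std::wstring& out) {
    ISpRecoContext* context = nullptr;
    ISpRecoGrammar* grammar = nullptr;

    // 1. Shared recognizer: SAPI sets up the default audio input.
    HRESULT hr = ::CoCreateInstance(CLSID_SpSharedRecoContext, nullptr, CLSCTX_ALL,
                                    IID_ISpRecoContext,
                                    reinterpret_cast<void**>(&context));

    // 2. Ask to be notified of recognitions through a Win32 event.
    const ULONGLONG interest = SPFEI(SPEI_RECOGNITION);
    if (SUCCEEDED(hr)) hr = context->SetNotifyWin32Event();
    if (SUCCEEDED(hr)) hr = context->SetInterest(interest, interest);

    // 3. Create, load, and activate a dictation grammar.
    if (SUCCEEDED(hr)) hr = context->CreateGrammar(1, &grammar);
    if (SUCCEEDED(hr)) hr = grammar->LoadDictation(nullptr, SPLO_STATIC);
    if (SUCCEEDED(hr)) hr = grammar->SetDictationState(SPRS_ACTIVE);

    // 4. Wait for a recognition and read the ISpRecoResult from lParam.
    bool got = false;
    while (SUCCEEDED(hr) && !got) {
        hr = context->WaitForNotifyEvent(INFINITE);
        SPEVENT ev = {};
        ULONG fetched = 0;
        while (SUCCEEDED(hr) && !got &&
               context->GetEvents(1, &ev, &fetched) == S_OK) {
            if (ev.eEventId == SPEI_RECOGNITION &&
                ev.elParamType == SPET_LPARAM_IS_OBJECT) {
                ISpRecoResult* result = reinterpret_cast<ISpRecoResult*>(ev.lParam);
                LPWSTR text = nullptr;
                hr = result->GetText(SP_GETWHOLEPHRASE, SP_GETWHOLEPHRASE,
                                     TRUE, &text, nullptr);
                if (SUCCEEDED(hr)) {
                    out = text;
                    ::CoTaskMemFree(text);  // GetText allocates with CoTaskMemAlloc
                    got = true;
                }
                result->Release();  // the event owns a reference to the result
            }
        }
    }

    if (grammar) grammar->Release();
    if (context) context->Release();
    return hr;
}
#endif
```

An InProc variant would replace step 1 with the recognizer creation, ISpRecognizer::SetInput, and ISpRecognizer::CreateRecoContext sequence; the remaining steps would stay the same.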

An ISpRecognizer, whether shared or InProc, can have multiple ISpRecoContexts associated with it, and each one can be notified in its own way of events pertaining to it. An ISpRecoContext can have multiple ISpRecoGrammars created from it, each one for recognizing different types of utterances.